Here are the show notes from Monday Hot Takes Episode 5, recorded on 7/13/2020. Did you know you can virtualize GPUs for compute (for ML/DL/AI)? You can! On Google Cloud, as well as on-premises with VMware (Bitfusion makes that pretty easy!). More in this episode!

Hot Take 1: Virtualize GPUs with Google Compute Engine VMs

Google made big announcements last week in the lead-up to Google Next this week, including Compute Engine A2 (Accelerator Optimized) virtual machines with NVIDIA Ampere A100 GPUs in the Google enterprise cloud. This is the first A100-based offering in the public cloud. You can get up to 16 GPUs in a single VM. Multi-GPU workloads run faster thanks to the NVIDIA HGX server platform, which offers NVLink GPU-to-GPU bandwidth. So not only can you virtualize GPUs, you can do it in the public cloud.

Who would want that infrastructure? Any organization running CUDA-based ML training, or even traditional HPC workloads. This is just infrastructure, though. If you’re the one architecting the solution, you need to understand the app. If you need a 101 on how ML or HPC apps work (both are underpinnings of AI apps), and architectures for them, check out the VMworld presentation I did a couple of years ago with Tony Foster.

These types of apps need lots and lots of server-to-server or VM-to-VM communication, and that communication needs to happen much more quickly than traditional networking transports can handle. They also need to process far more data in less time than CPU architectures can handle. Once you understand that, the data scientists will figure out which algorithm to use, and as an ops person you can figure out the architectures to support them.
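To put a rough number on why the transport matters, here is a minimal sketch that estimates how long one gradient exchange takes between workers using the standard bandwidth-only cost of a ring all-reduce. The function name, the 1 GB payload, the 8 workers, and both link speeds are illustrative assumptions, not figures from Google, NVIDIA, or VMware, and the model ignores latency and protocol overhead.

```python
def ring_allreduce_seconds(payload_bytes, num_workers, link_bytes_per_sec):
    """Bandwidth-only estimate of one ring all-reduce.

    Each worker sends and receives about 2 * (N - 1) / N times the
    payload, so the time scales with payload size over link bandwidth.
    Latency and protocol overhead are deliberately ignored.
    """
    if num_workers < 2:
        return 0.0  # a single worker has nothing to exchange
    traffic_factor = 2 * (num_workers - 1) / num_workers
    return traffic_factor * payload_bytes / link_bytes_per_sec


# Hypothetical workload: 1 GB of gradients shared by 8 workers.
GRADIENT_BYTES = 1e9
WORKERS = 8

# Assumed link speeds, converted from bits per second to bytes per second.
LINKS = {
    "10 Gbit/s link (assumed)": 10e9 / 8,
    "100 Gbit/s link (assumed)": 100e9 / 8,
}

for name, bytes_per_sec in LINKS.items():
    seconds = ring_allreduce_seconds(GRADIENT_BYTES, WORKERS, bytes_per_sec)
    print(f"{name}: {seconds:.2f} s per exchange")
```

Training repeats this exchange on every step, so whatever the real numbers are for your app, the time spent on the wire is multiplied thousands of times over. That is the part an ops person can size once the data scientists have picked the algorithm.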

Hot Take 2: Virtualize GPUs with VMware Bitfusion

VMware Bitfusion is now GA and available to VMware customers as part of vSphere. This means if you are a current Enterprise Plus (ENT+) customer, you can download Bitfusion as an “add-on” that is accessed via an OVA (check the install guide on the vmware.com HardwareAccelerators page). There is also a nominal fee per GPU.

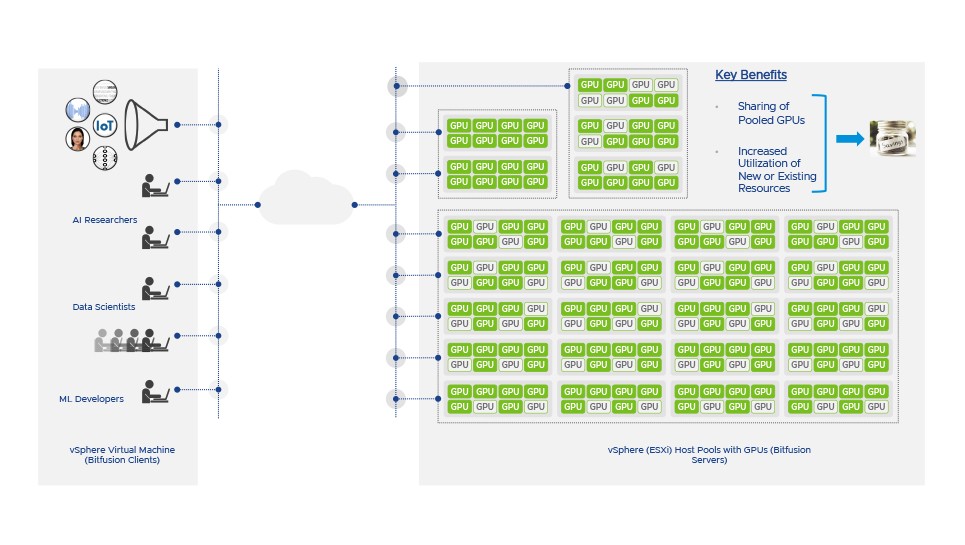

So what does Bitfusion bring to an ML/AI architecture? Bitfusion can virtualize GPUs, meaning an app can run anyplace and access the GPU when it’s time to do the processing. I’m sure they will have some great presentations at VMworld on how all this works.

Events

Info Sources

YouTube is a great data source. All the kids are using it, and so should you!

Real Talk

If you don’t understand what ML/DL/AI is, and you’re responsible for architecting solutions, you need to get your learn on. Also, if you’re a traditional server vendor, you need to really understand that HPC is happening in the cloud, using virtual machines.

Times are changing. I think we’re going to need to hold onto our hats for the next few years as we try to keep up!