AI is suddenly the hot new term. Microsoft has a multibillion-dollar investment in the artificial intelligence lab OpenAI. ChatGPT, OpenAI’s break-out application, has many people declaring a new era for work, education, and society in general.

With so much hype around these tools, how can you be sure that your organization doesn’t fall for AI FUD (fear, uncertainty, and doubt) when making a purchasing decision?

Should we be afraid of AI?

It is curious that during the GPT-4 release press tour, OpenAI CEO Sam Altman admitted he is a little bit scared of his organization’s large language models (LLMs). He said the public should be concerned if he claimed he was not afraid.

Not long after that, other tech leaders published an open letter asking those working on large language models to pause AI development for six months, warning that “AI labs [are] locked in an out-of-control race to develop and deploy ever more powerful digital minds that no one – not even their creators – can understand, predict, or reliably control.”

Both statements fit the definition of FUD: they spread fear, uncertainty, and doubt about powerful new platforms. The best way to conquer FUD is with knowledge, so let’s discuss steps to counteract AI FUD.

Step 1: Understand AI

In our last post, “How do you teach a machine to be intelligent?”, we explained what it means to teach machines to be intelligent, which is the original definition of AI. Because AI is the new hotness, expect to see more applications marketed as AI that really use other technologies under the AI umbrella, such as machine learning and deep learning.

For instance, ChatGPT is simply a chat interface to GPT-3.5 (and now GPT-4). GPT stands for Generative Pre-trained Transformer. GPT is a large language model (LLM) that was trained to look at the words in a prompt, determine which words to return, and return them in requested formats (emails, papers, resumes, etc.).
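
To make “look at words in a prompt and determine what words to bring back” a little more concrete, here is a toy sketch in Python. It is not how GPT works: GPT uses a transformer neural network trained on enormous amounts of text, while this sketch swaps in a simple bigram word count. The function names `train_bigrams` and `generate` and the sample corpus are hypothetical, made up only for illustration.

```python
from collections import Counter, defaultdict

def train_bigrams(text: str) -> dict[str, Counter]:
    """Count which word follows each word in the training text (toy model, not a transformer)."""
    words = text.lower().split()
    model: dict[str, Counter] = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        model[current][following] += 1
    return model

def generate(model: dict[str, Counter], prompt: str, length: int = 5) -> str:
    """Extend the prompt by repeatedly appending the most frequent next word."""
    words = prompt.lower().split()
    for _ in range(length):
        candidates = model.get(words[-1])
        if not candidates:
            break  # the toy model has never seen this word, so it stops
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)

# Hypothetical "training data": a few email-like phrases.
corpus = "thank you for your email . thank you for your time . thank you for your email ."
model = train_bigrams(corpus)
print(generate(model, "thank you", length=4))  # thank you for your email .
```

The toy model can only echo patterns from its training text, which is a useful intuition for the later steps: what a model produces depends heavily on what it was trained on.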

Know what you’re investing in, especially if the AI feature raises a service’s price. This AI definitions document from Stanford can help you learn the AI terminology and become an informed buyer. After all, why spend your budget on a tool that has just been AI-washed if it won’t really solve your problems?

Step 2: Know what the service was trained to do

You also want to ask what the service was trained to do. ChatGPT is a language model; it was trained to generate text such as stories and emails. Try to determine the service’s limitations so you’re not surprised when it doesn’t meet your team’s requirements.

Step 3: Do you know what data was used to train the model?

It is important to understand where the training data came from. Is there a possibility that the data was obtained without its creators’ consent, exposing your organization to risk?

One remedy is a service whose query results come annotated with their sources, so you can prove where the content you extract came from.
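
As a rough illustration of what “annotated” results might look like from the buyer’s side, here is a minimal Python sketch, assuming a service that returns each answer together with the passages, source URLs, and license labels it drew on. No real vendor API is implied; `SourcedPassage`, `AnnotatedAnswer`, `sources_outside`, the license labels, and the example URLs are all hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class SourcedPassage:
    """A piece of content the answer drew on, plus where it came from (hypothetical schema)."""
    text: str
    source_url: str
    license_id: str

@dataclass(frozen=True)
class AnnotatedAnswer:
    """An answer bundled with its supporting passages (hypothetical schema)."""
    answer: str
    sources: tuple[SourcedPassage, ...]

    def sources_outside(self, allowed_licenses: set[str]) -> list[str]:
        """Return the source URLs whose license is not on your approved list."""
        return [s.source_url for s in self.sources if s.license_id not in allowed_licenses]

answer = AnnotatedAnswer(
    answer="Our refund window is 30 days.",
    sources=(
        SourcedPassage("Refunds are accepted within 30 days.", "https://example.com/policy", "internal"),
        SourcedPassage("Most retailers allow 30-day returns.", "https://example.org/blog", "unknown"),
    ),
)
print(answer.sources_outside({"internal", "CC-BY-4.0"}))  # ['https://example.org/blog']
```

With provenance attached, a check like `sources_outside` lets you flag answers built on content you cannot account for before that content ends up in your own work.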

Bonus step: Will the service negatively impact your ESG initiatives?

It is quite possible that an AI service could affect your ESG (environmental, social, and governance) score. There are two areas to consider:

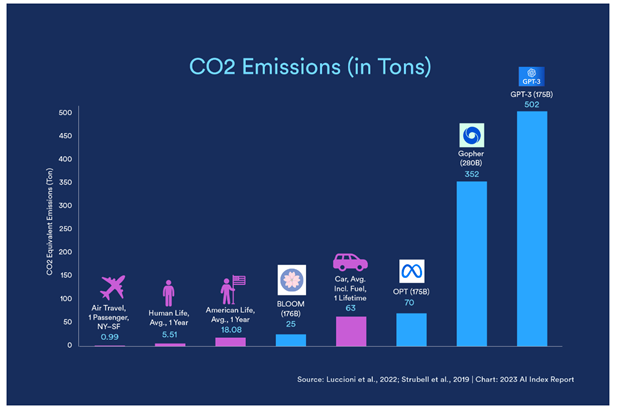

- Environmental. It takes an incredible amount of compute power to train LLMs. In its blog post “2023 State of AI in 14 Charts,” Stanford University’s Institute for Human-Centered Artificial Intelligence reports that training GPT-3 emitted 502 tons of CO2.

If your organization has an ESG initiative, energy use and emissions are valid questions to ask AI service providers.

- Social. The paper “On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?” points out several social challenges surfacing in the world of large language models, including the tendency of language models to amplify biases and other issues present in their training data.

This is especially true when data sets are created by scraping the internet, as is the case with the GPT data sets. Here are some of the bias problems that could be inadvertently encoded in training data gathered from public sources:

- Subtle patterns, like referring to “women doctors” as if “doctor” itself entails not-woman

- Referring to “both genders,” which excludes the possibility of non-binary gender identities

- Directly contested framings (e.g., “undocumented immigrants” vs. “illegal immigrants” or “illegals”)

- Language that is widely recognized to be derogatory (e.g., racial slurs)

It gets worse. Once a language model learns this language, it reproduces the bias in the text it generates through the chat interface. The model’s users then spread the encoded bias in the content they create.

This can lead to “more text in the world that reinforces and propagates stereotypes and problematic associations, both to humans who encounter the text and to future LMs trained on training sets that ingested the previous generation LM’s output.”

Don’t let AI FUD scare you away from AI

There is sure to be a lot more AI FUD and AI washing as we move into the post-digital-transformation era. But not everyone is pushing FUD. Bill Gates wrote a newsletter proclaiming that the age of AI has begun. Of course, Microsoft, which Gates co-founded, has invested billions of dollars in OpenAI. Interestingly, Gates is much more positive about AI than OpenAI’s CEO, though he does mention some of the things we’ll need to worry about.

Even the authors of the Stochastic Parrots paper are not simply negative about AI. They keep the risks front and center without spreading AI FUD. Like good scientists, they propose ways to build and train these models that minimize those risks.

For operations people, this is our new norm. Remember: neural networks are workloads! To counteract AI FUD, treat AI services the same way you’ve always treated new workloads you evaluate: learn what questions to ask, from the basics to performance requirements.

Shine some light on the topic!

If you’d like help getting oriented in this new world, please reach out for a complimentary consultation.